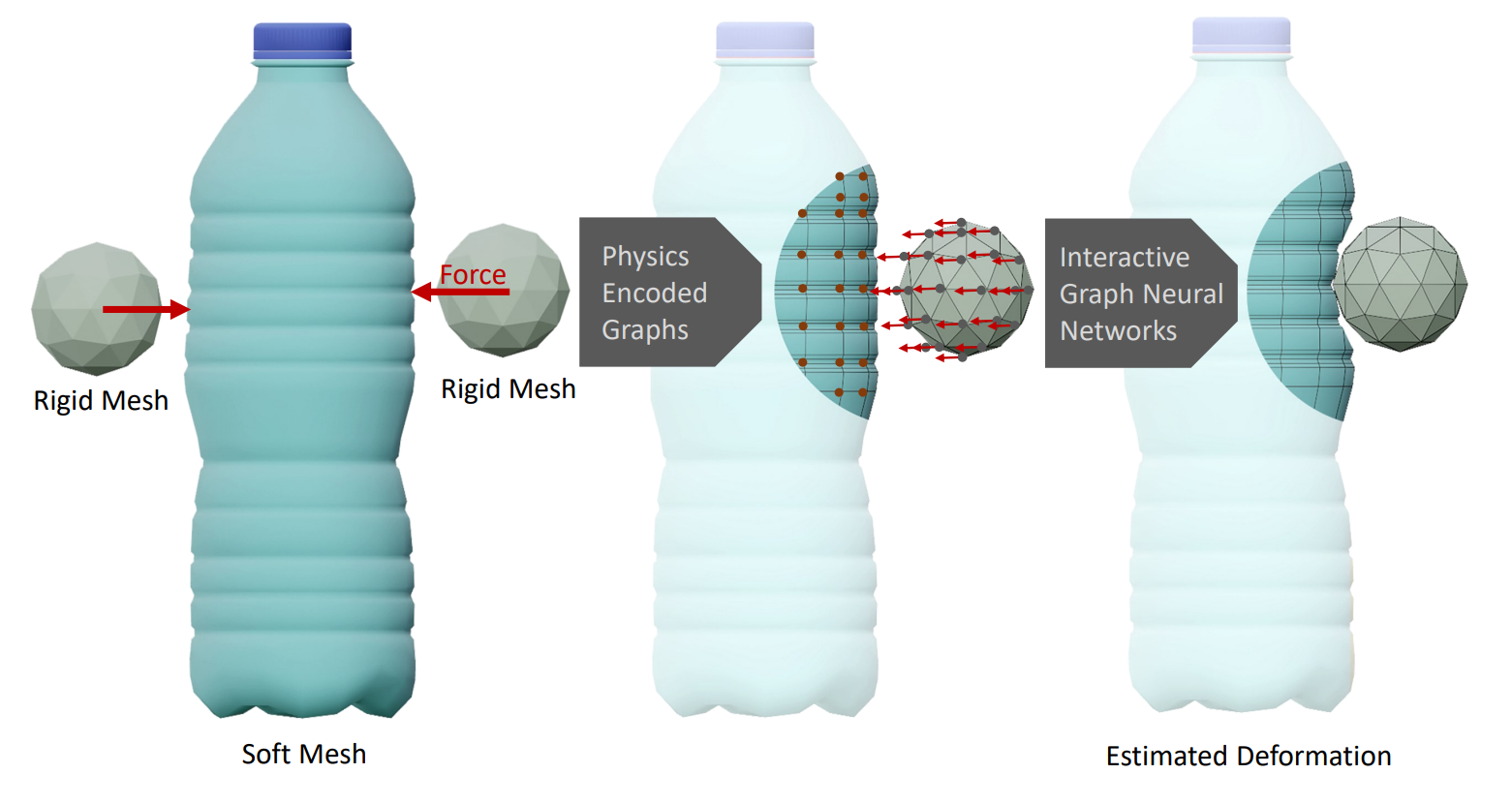

In robotics, it is important to understand how objects deform during tactile interactions. Precise deformation prediction can improve the fidelity of robotic simulations and has broad implications across industries. We introduce a method that uses Physics-Encoded Graph Neural Networks (GNNs) for such predictions. Mirroring robotic grasping and manipulation scenarios, we focus on modeling the dynamics of a rigid mesh contacting a deformable mesh under external forces. Our approach represents both the soft body and the rigid body as graphs whose nodes hold the physical states of the meshes. We also incorporate cross-attention mechanisms to capture the interplay between the two objects. By jointly learning geometry and physics, our model reconstructs consistent and detailed deformations. We release the code alongside a dataset designed to analyze the geometry and physical properties of deformable everyday objects, supporting research in robotic simulation and grasping.
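The abstract describes two ingredients at a high level: graphs whose nodes carry physical state, and cross-attention linking the soft body to the rigid body. The sketch below illustrates how these could fit together in a single update step. It is not the released implementation. It assumes node features are plain 3-D positions, uses simple mean aggregation as a stand-in for the learned message-passing layers, and applies cross-attention without learned query/key/value projections (a trained model would learn these). All function names are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def mean_aggregate(features: List[Vector], edges: List[Tuple[int, int]]) -> List[Vector]:
    """Hypothetical message-passing step on one mesh graph: each node averages
    its own features with those of its neighbours (edges are undirected)."""
    dim = len(features[0])
    sums = [list(f) for f in features]  # copy; acts as a self-loop
    counts = [1] * len(features)
    for i, j in edges:
        for k in range(dim):
            sums[i][k] += features[j][k]
            sums[j][k] += features[i][k]
        counts[i] += 1
        counts[j] += 1
    return [[s / c for s in row] for row, c in zip(sums, counts)]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: List[Vector], keys: List[Vector],
                    values: List[Vector]) -> List[Vector]:
    """Scaled dot-product attention: each query (soft-body node) attends over
    all keys/values (rigid-body nodes). Identity projections are assumed."""
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[c] for w, v in zip(weights, values))
                    for c in range(len(values[0]))])
    return out


def contact_update(soft_feats: List[Vector], soft_edges: List[Tuple[int, int]],
                   rigid_feats: List[Vector]) -> List[Vector]:
    """One hypothetical update of the soft-body nodes: aggregate within the
    soft-body graph, then add (residual) the attended rigid-body features."""
    local = mean_aggregate(soft_feats, soft_edges)
    attended = cross_attention(local, rigid_feats, rigid_feats)
    return [[a + b for a, b in zip(l, r)] for l, r in zip(local, attended)]


if __name__ == "__main__":
    # Toy soft-body graph (3 nodes, 2 edges) and a toy rigid body (2 nodes).
    soft_nodes = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
    soft_edges = [(0, 1), (0, 2)]
    rigid_nodes = [[0.0, 0.0, 1.0], [1.0, 1.0, 1.0]]
    for node in contact_update(soft_nodes, soft_edges, rigid_nodes):
        print([round(x, 3) for x in node])
```

Keeping the soft body and the rigid body as separate graphs and linking them only through attention means the rigid body can have a different number of nodes than the soft body, which is one plausible reason to prefer cross-attention over fixed contact edges.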

@article{saleh2024physics,
  title={Physics-Encoded Graph Neural Networks for Deformation Prediction under Contact},
  author={Saleh, Mahdi and Sommersperger, Michael and Navab, Nassir and Tombari, Federico},
  journal={arXiv preprint arXiv:2402.03466},
  year={2024}
}